Scientific Computing III

Class Information

This course in computational techniques for solving physical problems is for students who have taken PHYS 3511 Scientific Computing II or had extensive previous programming experience. This course covers advanced topics in computational problem solving such as machine learning, probability density function optimization, and Baysian statistical methods, GPU programming. The instructor may add additional topics of interest.

Introduction to Machine Learning

Definitions of ML

- Arthur Sammel (1957): Gives computers the ability to learn without being explicitly programmed

- Tom (1998): A computer program is said to learn from experience,

- If performance on

- If performance on

ML Algorithm Types

-

Supervised Learning: Give an exact target for each sample during the training process

-

Unsupervised Learning: You don’t give the machine an exact target

- Never give any label during training

- Machine finds patterns in data on its own

-

Reinforcement Learning:

- It can use supervised and unsupervised algorithms

- Good for well-defined “playground”

- Reward good behaviour and punish bad (Learn from mistakes)

-

Recommender System: e.g. Youtube recommendations

Supervised Learning

Provide machine with “right answers” train the machine to predict the unknown target

- Regression: Predict continous valued outputs

- Classification: Predict discrete valued outputs

Example 1: Housing Price Prediction

- Cost ($) as a function of Size (Sq ft.)

- Can be done with regression (linear or nonlinear)

- Cost ($) as a function of Size and Rennovation Cost

- Multivariable problem with vector representing state value for given input

Example 2: Cancer Diagnosis

- Output (has_cancer): 0 or 1

- Input: Tumor Size

- Problem: Find boundary between “cancer” and “not cancer”

- This is a type of classification problem

Unsupervised Learning

- ML finds patterns in data on its own without explicitly labeling training data with a target

Examples

- Points on a plane, find clusters

- Cluster customers into different groups

- Astronomial data analysis: Find clusters in star/galaxy photo

Regression Problem

- Regression Problem: Predict real-valued output

- Training Set: Data where you know the target value and value for the feature for each point

- Feature = parameter = input variable

- Target = output variable you want to predict the value of

- Let

- Let

- Let

- The learning algorithm generates a hypothesis map/function,

- Univariate linear regression: Linear regression with one variable (one feature)

Linear Regression

-

Choose slope (

-

Hypothesis function

-

Function to minimize

-

We use the difference squared since if it wasn’t there could be both positive and negative differences which would lead to a minimized function that isn’t that good

-

Cost function

-

We want to minimize the cost function

-

Consider the case when

- For

- For

- For

- For

- For

- Graphing

- For

-

Considering the multivariable function

Gradient Descent

- To find the minimum for

- Make sure to only update

- Learning Rate:

- If

- If

- If

- If there is a local min as well as a global min for

- One solution is to use a larger

- A better solution is to add a “Kinetic Term”. Consider a particle in a well and add kinetic term so particle can oscillate and get out of the well (local minimum)

- One solution is to use a larger

Gradient Descent for Linear Regression

- Gradient Descent for Linear Regression will always converge since

- For j = 0

- For j = 1

- Since we’re not going to use a lot of data, we can use all the samples for each step of GD (“batch processing”)

Linear Regression with Multiple Features

Example: Housing Price

| Size | # of Bedrooms | # of Floors | Age of Home | Price ($K) |

|---|---|---|---|---|

| y | ||||

| 404 | 5 | 1 | 45 | 460 |

| 1416 | 3 | 2 | 40 | 232 |

| 1534 | 3 | 2 | 30 | 315 |

-

-

-

-

Hypothesis:

- Previous:

- Now:

- Previous:

-

For convenience of notation, define

Pseudocode for Multivariable Gradient Descent for Multivariable Linear Regression

Eg:

Eg:

Feature Scaling

- Examples

- The problem with the above means there might be problems with gradient descent due to how different the ranges are for each feature

- If you graph

- If you graph

- Features have to be on the same scale, roughly

Mean Normalization

-

Let

-

-

Using the above for the housing price example

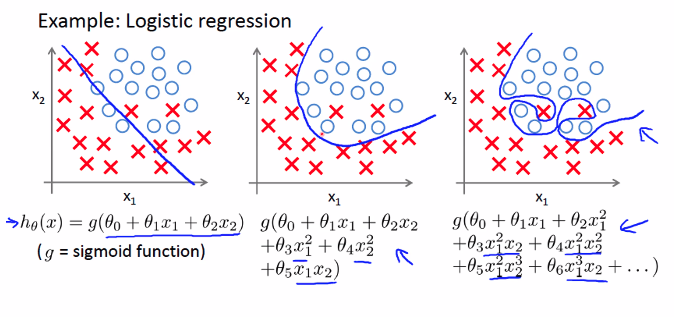

Logistic Regression - Classification

- Not really used for ML, but an important example of something that gives a non-linear relationship

- Linear Regression doesn’t work for classification problems (Email Spam, Benign Tumor/Cancer)

- Gives wrong predictions

- Linear regression doesn’t confine the values to a specific range

Example: we have data that is either 0 or 1

- We want

- Define the following

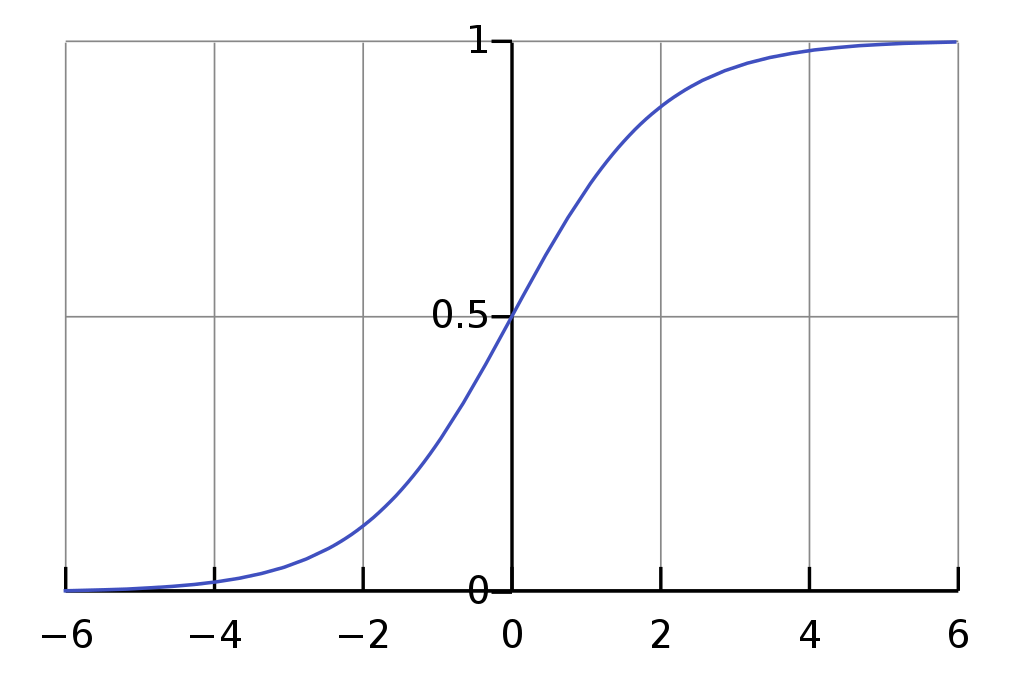

The Sigmoid Function/Logistic Function satisfies this:

Note that

Interpretation of

Example

We’re classifying tumors based on size. 1 = Tumor is cancer, 0 = tumor is benign

If

Statistical Understanding

Probability that

Note the following is true:

Decision Boundary

Suppose

For the g(z), sigmoid/logistic function:

The Line formed by

Non-Linear Decision Boundary

The above decision boundary example was linear

-

Imagine a data set such that the data is separated into two parts by a decision boundary that is a circle

-

Example: Radius of circle is 1

General Decision Boundary With a Lot of Linear Terms

- The problem with this general problem: What if the decision boundary is simple like a linear line? How do we know beforehand that the decision boundary is linear? We’ll make the decision boundary overly complex with the extra terms if we use the general solution above. Overfitting to be covered in later sections.

Cost Function

where

- How do we choose parameter

- For linear regression we saw

- Unlike in linear regression, if we choose

- So we have to choose a different cost function and different

- Unlike in linear regression, if we choose

Choose

The following does what we want

- Consider the case when

- Since the hypothesis function,

- Since the hypothesis function,

Gradient Descent for Logistic Regression

- Recall that the gradient descent algorithm is the following:

- It turns out the gradient descent algorithm looks exactly the same as for linear regression, just replace

Conversion to Matrix Form

Note:

- If we have two column vectors,

In the gradient descent algorithm and cost function,

- This is computationally intensive

- Instead, we can convert the summations into matrix multiplication or dot product of column vectors since matrix multiplication/dot products are faste rto compute (especially with libraries like numpy)

Define the following

In this example,

Note that the matrix of training data,

Important Matrices and their Dimensions

Then we have the following equivalencies:

Gradient Descent Algorithm in Matrix Form

We can then rewrite the gradient descent algorithm as follows:

Other Optimization Algorithms

Conjugate Gradient

- Popular a lot for Computational Chemistry/Material Science (DFT)

- Google “BFGS” and “L-BFGS”

Advantages

- No need to manually pick

- Often faster than gradient descent (GD)

Disadvantages

- More complex

- A lot of other parameters

- Parameter akin to Speed of particle on potential

- Paremeter akin to Random motion of particle

Multi Classification Problem

- Instead of just a binary classification (yes, no), what if we want to classify data into more than just two categories

Example

- Email Tagging: Classify inbox into Work emails (

One vs. All (One vs. Rest)

- You can reuse the binary classification algorithm to classify data into

- Then afterwards, classify the

- Repeat

OverFitting

- Options to address overfitting

- Reduce number of features

- Manually select which features to keep (requires domain knowledge to know which ones to keep and which ones are less important)

- Model Selection Algorithm

- Regularization

- Want to make

- Want to make

Gradient Descent for Logistic Regression

Repeat {

}

K-Means Cluster - Unsupervised Learning

Uses

-

Film Recommendation

-

Social Network, Finding similarities between users

- Represent each user as node in graph, with connection as edge

-

Materials Science, represent each material as node with properties as edges

-

Cluster

Algorithm

- Input

- Number of Clusters (K)

- Training Set:

-

Randomly pick

- Sometimes more efficient to choose

- Sometimes more efficient to choose

-

Repeat {

// Cluster Assignment

for i=1 to m:

// Move Centroid

for k=1 to K:

}

Example:

- Suppose

- Then

Optimization Objective

- Want to minimize

- Minimize J with respect to

- Minimize J with respect to

- Minimize J with respect to

Random Initialization

- Randomly pick

- Set

What is the right value of K

- Graph of J vs. K should give you an obvious “elbow” point (drops steeply) and then drops smoothly and less steeply afterwards

-

The

-

However, sometimes the graph doesn’t drop steeply. This means you have too many features or that the K-means algorithm itself is not a good algorithm to use in this case

-

PCA (Principle Component Analysis)

- If we have too many features (too many dimensions) when doing K-means algorithm, we can reduce to fewer dimensions

- PCA, Kernel PCA, ICA: “Powerful unsupervised learning techniques for extracting hidden (potentially lower dimensional) structure from high dimensional datasets”

- In the data cleaning step of workflow

Uses

- Visualization

- More efficient (time, memory, etc.)

- Fewer dimensions implies better generalizations

- Noise Removal

Idea

- Want to pick the most important features (principle components)

- Project the data onto the principle component axis(es) so that variance among data is maximized

- Alternatively: Find a direction (a vector

A Tutorial on Principal Component Analysis by Jonathon Shlens

Algorithm

- Reduce from

- Find

- Compute"Covariance Matrix”,

- Calculate eigenvectors of the covariance matrix,

- where the columns of

- First column of U,

- Second column of U,

- And so on until

- First column of U,

- where the columns of

Example: Supervised Learning Speedup

*

-

Suppose there are 1000 features

-

Extract inputs and unlabaled dataset:

-

Do PCA to reduce dimensions from training set

-

New training Set has features reduced:

Difference/Connection Between SVD and PCA

- PCA transforms data linearly into a new set that are not correlated (orthgonal)

- SVD is a method in linear algebra used to diagonalize a matrix into special matrices

- We use SVD to find PCs (Principle Axises in PCA)

Matrix Diagonalization

-

For some square matrices,

-

where each column in

-

And

-

Singular Value Decomposition

For any matrix,

-

-

-

-

-

-

-

-

-

SVD states that any matrix

-

Each

- we can order the eigenvectors so that the higher eigenvalues come first

- Higher eigenvalues mean larger variance (so more important PC)

- You can then project the data onto the first few PCs since the

Neural Networks: Non Linear Classification Example

-

Using a neural network is a solution for non linear regression or classification problems with a large number of features

-

Using the sigmoid function is not feasible since the number of nonlinear terms in

Neuron Model

- Each neuron can have multiple inputs (can be from other neurons). Depending on the current strength, the neuron fires

- Non linear activation!

- Inputs:

- The wires coming into the neuron are how heavily weighted each input is, in other words,

- The output is

- The sigmoid function is the activation function

- The wires coming into the neuron are how heavily weighted each input is, in other words,

Example

Forward Propogation: Vectorized Implementation

The general steps for forward propogation:

-

You can cut unimportant features with small weights. Called “dropout”

-

-

If instead of each

Example: And Gate

Activation Functions

- Sigmoid Function (from 0 to 1)

- Hyperbolic Sin (from -1 to 1)

- Rectified Linear (relu)

- Softmax

- Can be viewed as a screening function

- Picks out the most important/dominant components of a vector and assign larger values to those components

Bayesian Statistics and Naive Bayes Learning

- Sometimes we don’t have the “big data” needed for the algorithms used above

- If you don’t have a lot of data, you can use Bayesian Statistics and Naive Bayes Learning

- But if you do have a lot of data, use the above big data techniques

- Example: Recommender System

- Asks the Question: What are the features like if

Conditional Probability

Example

The % of adults who are male and alcoholics is 2.25%. What is the probability of being alcohol, given being a man?

Bayes’ Theorem

Example

What is the probability of a family with two girls given that the family has at least one girl?

- GG, GB, BG, BB

- 3/4 of the above cases have at least one girl

- 1/4 of the cases above have two girls

- P=1/3 for probability that a family has two girls given at least one girl

- Now using Bayes’ Theorem

Using Bayesian Statistics for Recommender Systems

- Suppose we know

- Given new

Example

Question: What is the probability that for a new sample

Naive Bayes Example: Text Classification

- Given: Feature vector

- The feature vector describes the number of occurences of a word or probability of the word appearing (sort of like a hashmap)

- For this example:

- Then compare

- e.g.

- e.g.

Atomic Simulations

Why Simulate?

- “Computers don’t solve problems, people do” (Frank Jensen)

- Simulations rarely simulate reality

- Simulations mainly compute quanitites that will either prove or disprove a theory

Energy Models

- Empirical Models (parameters fitted to experimental data)

- Fastest

- Many body potentials

- Pair potentials

- Semi-Empirical

- Tight Binding

- Quantum Mechanical (start from Schrodinger’s equation and make approximations)

- Slowest

- Quantum chemistry

- Based on atomic orbitals

- Density Functional Theory (DFT)

- Quantum Monte Carlo

Born Oppenheimer Approxmation

- All atoms characterized by coordinate vector

- System characterized by wavefunction

Born Oppenheimer Approximation

- The wavefunctions of the nuclei and electrons can be treated separately since the nuceli are a lot heavier than the electrons

Pairwise Energy Summation: Pair Potential

Formation Energy of Crystals

- Amount of energy that holds a crystal together

- Formation energy is always negative

Density Functional Theory

- All system properties can be represented as a function of electron density, which in turn can be represented by wavefunctions